Faith

Federated Learning: an eXplainable Interface for Clinicians

Role

Goal

Help clinicians analyze data for accurate depression monitoring and prediction.

Team

Tools

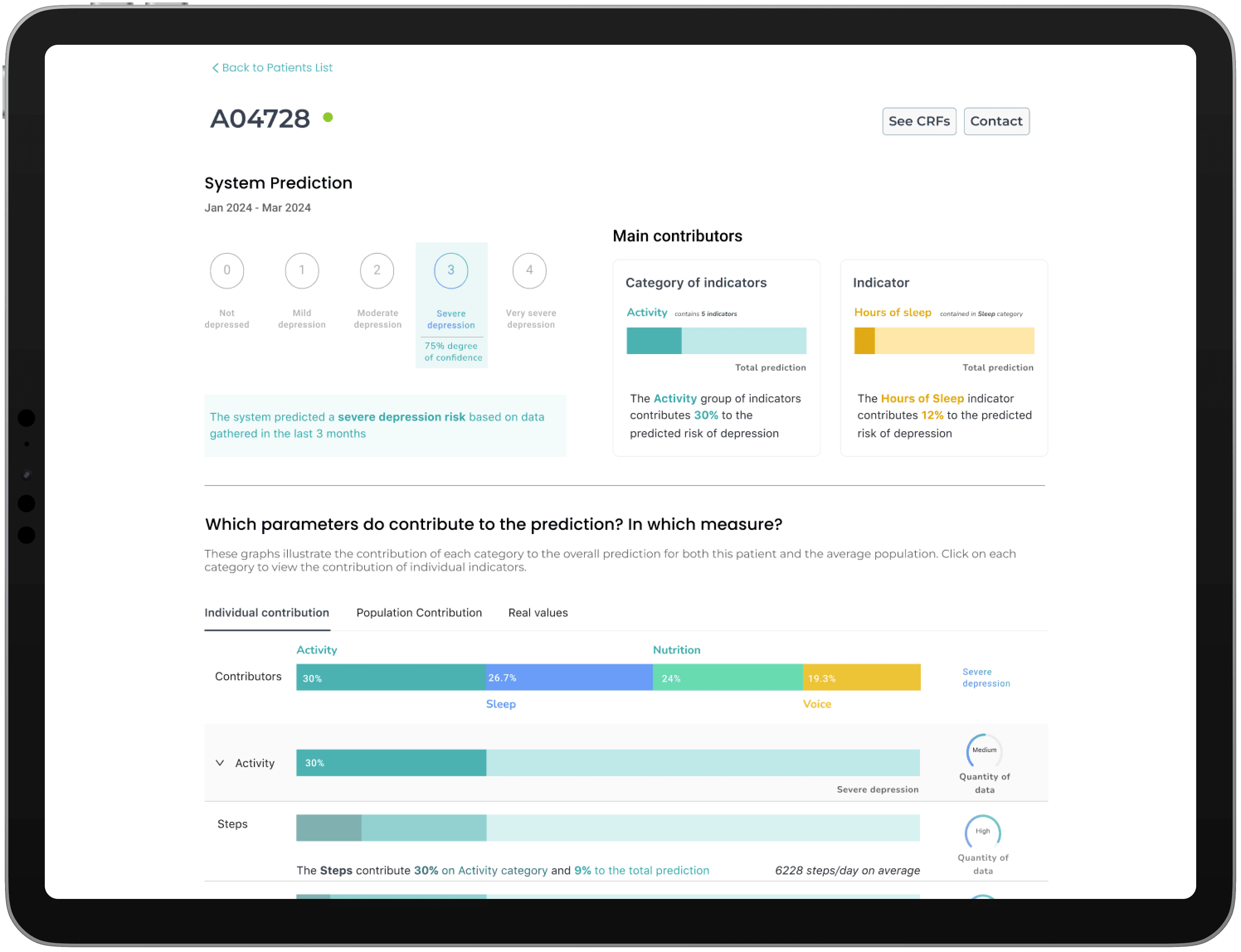

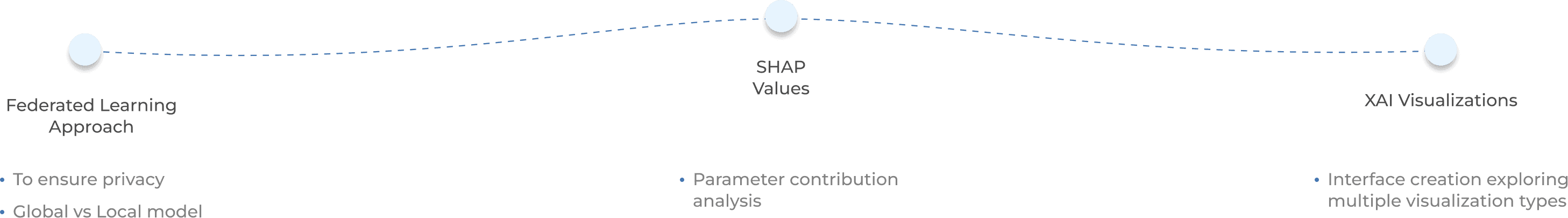

The solution

FAITH is an AI-based project designed to remotely detect signs of depression in people who have completed cancer treatment, using privacy-preserving Federated Learning. By applying explainable AI techniques (SHAP values), FAITH identifies the most influential indicators contributing to each prediction, making the results more transparent and easier for users to trust.
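To make the idea of "most influential indicators" concrete, here is a minimal sketch of how Shapley values attribute a single prediction to its inputs. Everything in it is an assumption for illustration: the indicator names, the weights, the baseline patient, and the toy linear `risk_score` model are hypothetical and are not FAITH's actual model or data. It computes exact Shapley values by brute force rather than using the SHAP library, which is only practical for a handful of indicators.

```python
from itertools import permutations

# Hypothetical indicators and weights, for illustration only (not FAITH's model).
WEIGHTS = {"sleep_hours": -0.4, "daily_steps": -0.2, "mood_score": -0.6}
# Hypothetical reference patient that contributions are measured against.
BASELINE = {"sleep_hours": 7.0, "daily_steps": 6.0, "mood_score": 5.0}


def risk_score(features):
    """Toy linear risk model: lower sleep, activity, or mood raises the score."""
    return sum(WEIGHTS[name] * value for name, value in features.items())


def shapley_values(model, patient, baseline):
    """Exact Shapley values: average marginal contribution over all orderings."""
    names = list(patient)
    totals = {name: 0.0 for name in names}
    orderings = list(permutations(names))
    for order in orderings:
        current = dict(baseline)           # start from the reference patient
        previous = model(current)
        for name in order:
            current[name] = patient[name]  # reveal one indicator at a time
            score = model(current)
            totals[name] += score - previous
            previous = score
    return {name: total / len(orderings) for name, total in totals.items()}


def rank_indicators(contributions):
    """Sort indicators by absolute contribution, most influential first."""
    return sorted(contributions, key=lambda n: abs(contributions[n]), reverse=True)


patient = {"sleep_hours": 4.0, "daily_steps": 5.0, "mood_score": 2.0}
contributions = shapley_values(risk_score, patient, BASELINE)
ranking = rank_indicators(contributions)
print(ranking)
```

The contributions always sum to the difference between the patient's score and the baseline score, which is what lets an interface present them as a breakdown of one prediction.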

The challenge and background

AI is not really "explainable"

Prioritize parameter importance

Ensure privacy and security

Cancer affects over 18 million people annually worldwide, often leading to significant mental health challenges, including anxiety and depression. While healthcare providers can recognize the signs in clinical settings, those signs may go undetected once patients return home and resume daily life. FAITH aims to bridge this gap by giving healthcare professionals early warnings through explainable AI (XAI) interfaces that identify key indicators of depression, supporting timely intervention and a better quality of life after cancer.

Summary of results

Over 100 patients

involved in the trials

3 different visualizations

to analyze indicators' contribution

Privacy and security

ensured through federated learning